Outline for Today

Media

Transcript

Evaluation

Zoom Audio Transcript

-

My computer's not happy with me today, so I'm going to try and just focus on the board. How's everyone doing today? All right, well, that's saying something, I think. So, this should be the right password for today. Is it large enough for you to see? I'll take a picture of the board afterwards and post it.

-

yeah it's good for now. Okay.

-

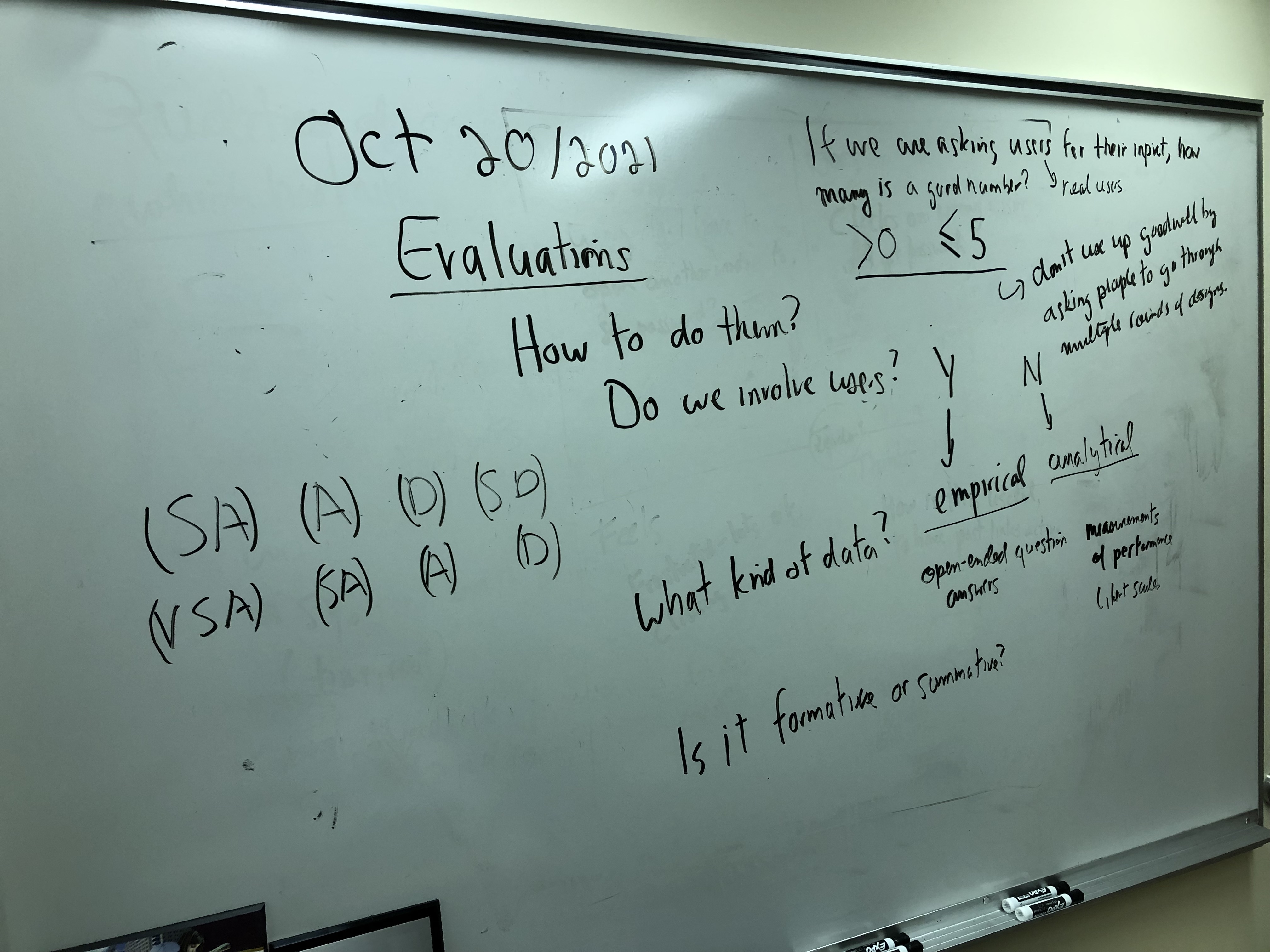

So we started talking about evaluation last day. There are a number of different ways we can evaluate things. Evaluation helps us identify what needs to be done and what room there is for improvement, and it gives us a benchmark to say when we've accomplished our goal of improving the design: of taking the opportunities for redesign that we found and seeing whether or not the user experience has improved with the interface. So what are some ways we talked about evaluating interfaces last day? Or, just in general, how can we classify evaluation? What are the choices we have for conducting an evaluation? Do we need to have users to do an evaluation?

-

Yeah, I mean, maybe we can have users evaluate our interface, or maybe we can also have experts evaluate it.

-

Yeah, so we have a choice. We can have an expert do a walkthrough of the interface. We can examine the interface design according to the heuristics we've talked about. So we don't need users for that kind of approach. But then, if you want to have users and see what issues they encounter, that's another alternative. If we have experts doing a walkthrough, they can identify issues with the screen, but not as it's being used. So we get a different perspective. So if we do have users involved, we...

-

Can.

-

If we have users involved, we collect data to see whether or not, let's say, they can find a Zipcar to rent in their neighborhood, and whether they can identify the price they would need to pay, or the plan that would best support them in their use of the service. If they can answer those questions correctly, we can say yes, they did the task, or they had problems. And we can also look at the time it takes to complete the task. So we can get empirical data: it's an empirical evaluation, involving the users and measuring their performance. Am I off the screen here? I just thought, because I'm speaking, can you hear me all right? Yes? I should get the bigger window here.

-

you're doing all right.

-

Anyway, so we can do an empirical evaluation. Maybe another question to ask is what kind of data to collect. We can collect data that's unstructured: we can say, what did you think about it, what did you like best about this interface? Or we can measure performance: how long did it take the person to complete the task, did they complete the task correctly, how many errors did they make, or how many clicks did they make along the way. Those kinds of things. And we can also collect data in the form of Likert scales. We can have items where people rate how much they agree with a statement, and they can say strongly agree, agree, disagree, or strongly disagree. Are you familiar with the Likert scale?

-

yeah.

-

That's often used in evaluations of different kinds. So we have a choice. First, to get data from the scales that we can analyze, we should make sure that the scale is balanced around the center. So we have strongly agree on one side and strongly disagree on the other side. Those are balanced around the middle, "not sure". And the same with agree and disagree: those are balanced around the middle. If we have an even number of points, we're asking the respondent to make a choice between agree and disagree. Or we could have an odd number of points on the scale and include "not sure" in the middle. There are implications for either choice. If you don't give the middle point, then you're getting people to choose which side of the fence they're on, so to speak, and they may not want to choose a side of the fence. So I guess it can depend, and you might like to give them the middle point when that's an option that you think is a reasonable one. So if we have the idea that the points are equally spaced and balanced on each side of the center, then we can do some analysis; we can make some assumptions about the data we collect that way. Say that, instead of strongly agree, agree, disagree, strongly disagree, we added very strongly agree on top of strongly agree.
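As a rough illustration of the balance idea discussed here, the following Python sketch checks whether a set of scale labels is symmetric around the center and whether it offers a middle point. The `POLARITY` table and the function names are hypothetical, chosen only for this example; they are not from the lecture or from any survey library.

```python
# Hypothetical mapping from scale labels to signed positions around the center.
POLARITY = {
    "very strongly agree": 3,
    "strongly agree": 2,
    "agree": 1,
    "not sure": 0,
    "disagree": -1,
    "strongly disagree": -2,
    "very strongly disagree": -3,
}


def is_balanced(labels):
    """Return True when every positive point has a matching negative point."""
    scores = sorted(POLARITY[label.lower()] for label in labels)
    # Pair the lowest score with the highest, the second lowest with the
    # second highest, and so on; each pair must cancel out.
    return all(low == -high for low, high in zip(scores, reversed(scores)))


def has_midpoint(labels):
    """Return True when the scale offers a neutral middle option."""
    return any(POLARITY[label.lower()] == 0 for label in labels)


five_point = ["strongly agree", "agree", "not sure", "disagree", "strongly disagree"]
four_point = ["strongly agree", "agree", "disagree", "strongly disagree"]
lopsided = ["very strongly agree", "strongly agree", "agree", "disagree", "strongly disagree"]

for name, scale in [("five_point", five_point), ("four_point", four_point), ("lopsided", lopsided)]:
    print(name, "balanced:", is_balanced(scale), "midpoint:", has_midpoint(scale))
```

The four-point scale is balanced but forces a choice of side, while the scale with an extra "very strongly agree" on one side only is not balanced.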

-

me.

-

So those two sets aren't balanced around the center. We're not doing ourselves any favors when we collect data that way, so we should be careful about how we set up the instruments to collect data from people. Any other ways we can think about evaluation? Other questions we can ask when setting up evaluations? One other kind of distinction is whether it's formative or summative. Formative means that we can use the information to focus on what needs to be done. Summative means that we're using the evaluation to decide whether or not we've reached our goal, that the design is finished. Formative is done early in the process, and summative can be done later on to make sure that we've achieved the goals that we've set out for ourselves. Does that make sense? Now, say we're talking to users, asking them for their thoughts. Say we have this low-fidelity prototype, done with paper and pencil or pen. We want them to go through it and give us their thoughts about it. How many users is a good number to have? You can start by saying greater than zero. So what's the number where we might start to see diminishing returns? Should we try and get 30 users to look at our paper-and-pencil prototype? Would that be useful? Can you give me a reaction, yes or no?

-

Could you repeat the last question, I mean the last topic?

-

I said that anything more than zero is good. If we get anybody to look at the interface, it's helpful to us. But is there a number past which we aren't getting the same value from that information?

-

I think it's totally dependent on the target number of users. If we're talking about an application that's going to have limited usage, it could be even one user, and he or she could be the sole evaluator of that application prototype. But if we are talking about a major application, we're going to have to try and check as many boxes as possible, with as many people as would be feasible. Okay.

-

So.

-

So we want the people we're asking for input to be real users. For example, if I asked you, Jeffrey, for your thoughts about this interface I'm designing for my ninety-year-old mom, and you have a lot of suggestions about it, is your input, however well intentioned, going to be...

-

I think.

-

...as valuable as me talking to my mom and her friends of the same age?

-

Yeah, I would say my evaluation might be worth less, but that's simply because I'm not an expert in that field. But I would say, or argue, that the user is always right. So you would want to design it for the user, or by the user, in this case.

-

Yeah, so I just mean that we're going to help ourselves, when we ask people for their input, if we do some work to match the people we're asking with the target group of users we have for the application, for the interface.

-

yeah.

-

Because the textbook here mentions evaluations not involving users. It's a case where you're using inspection methods or trying to predict what the user behavior is going to be. And yeah, it is a bit more difficult, because you are not the user.

-

Yeah. So if we're getting people to do an inspection, it's important that we communicate who the intended user of the interface is. Is it people who are living in a retirement home, or is it people who are undergraduate computer science students at a university, and so on.

-

But.

-

So, thinking about getting users so that we can collect some data from them: we can collect qualitative data, asking them to tell us what they're thinking about when they look at the interface and when they're deciding which pictures of buttons to press on the paper screen that we mocked up for them. So that gets us some data as well. If we have one person to give us some feedback, that's much better than having no people giving feedback. Because, no matter how well intentioned we are as designers, we're going to get caught in some traps, where we have some assumptions that are not clearly articulated, that we think are valid, but that the user may not see as valid. So one person's comments can be really valuable in highlighting some of those assumptions that may cause users to be stuck on the interface. Now say we're thinking about an application that's going to be used by students; let's think about my course web page design. If I get one student to comment on my design, that helps me a lot. But do we need to have input from everybody in class?

-

Depends whether or not the student was wrong.

-

Okay, so I'm certainly not going to suggest that students are wrong. I value everyone's opinion. So let's imagine a case where I show you the paper-and-pencil version of the interface and ask you to think about how you'd navigate through it to find information. Then I collect that feedback data and I make another iteration of the design, and then I ask the same group of people to give me feedback. And let's say we go through three different designs in that way, three iterations. So do I want to keep involving everybody in the evaluation? What's the downside if I say, well, just give me your opinion about the third one now, after you gave me opinions for the first two? Here's a question to think about: are people going to be as enthusiastic to give some feedback about the third step in this process as they were the first time they were asked? Does that make sense? So, just to get us back on topic, I asked how many. If we can get input from one person, that's great. If we can get input from two people, that's really helpful. Three people? Four, five, six, seven, eight, nine, 10, 11, 12, 13, 14, 15? There's some discussion, in a few places, where they talk about, I think, five people to look at an interface design. Because, after that, they may start to identify the same issues, and so you might not get so much new information. And if you don't use up the whole population of potential users on one request for feedback, then you can use a different set of five people, or five or 10 people, the next time, and the next time, so you could do all three iterations with different people. Does that make sense? We also have the idea of making sure that we get people's free and informed consent to participate.
So free and informed consent means that the consent is freely given, and the informed part means that they understand what they'll be asked to do and how the data will be used and so forth. So, in terms of the design of the participant pool that some of you are familiar with from computer science and also in psychology... Let's see, I'll sit down. Am I off camera here? Can you still hear me all right?
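The earlier point about roughly five people per round can be sketched with a simple diminishing-returns model. This is an assumption added for illustration, not something stated in the lecture: it supposes each evaluator independently finds a fixed share of the issues, so the share found by `n` evaluators is `1 - (1 - p)^n`. The default `p_single = 0.31` is a commonly quoted average from the usability literature and should be treated as a placeholder; the function name is hypothetical.

```python
def share_of_issues_found(n_users, p_single=0.31):
    """Estimated share of issues found by n_users independent evaluators.

    Assumes each evaluator finds any given issue with probability p_single,
    which is a simplifying assumption rather than a measured value.
    """
    return 1.0 - (1.0 - p_single) ** n_users


previous = 0.0
for n in range(1, 11):
    found = share_of_issues_found(n)
    # The gain column shows how much each additional person contributes.
    print(f"{n:2d} users: {found:.0%} found, gain {found - previous:.0%}")
    previous = found
```

Under these assumptions each extra person adds less than the one before, which is one way to read the suggestion to spread a pool of potential users across several small rounds rather than using everyone at once.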

-

Yes. Okay, thanks.

-

So the way that's set up is this: even though I'm the coordinator for the participant pool in computer science, and sometimes I'm involved in the studies that go on there, I keep at arm's length, so that I don't know who's participating until the end of the semester, when they get their research credits. That way I'm not keeping track and saying, well, Jeffrey, or anyone else, it would be really great if you participated in the study; I'm sure that would really help your mark in the course. That doesn't give you an opportunity to give free and informed consent, as it sounds like I'm telling you to do this, or else. Which would be very wrong of me, and which I would never do. Does that make sense?

-

yeah. Okay.

-

The same thing goes for the research credits. You get a 1% bonus mark if there's a research study going on in the semester and you choose to participate and apply it to the course. So, say, 1% for each study you participate in. We're trying to balance acknowledging participation against making the reward so great that you would choose to participate even without really wanting to. You know: "I really hate the idea of participating in the study, but I can pass the class if I participate and get a 20% or 25% bonus mark." So the reward for one study, at 1%, is not so great as to make you behave differently than you would otherwise feel inclined to. But it's something, so that you can still feel acknowledged, and feel gratitude from the department for participating and helping to further research in the department. So that's the balance there, keeping free and informed consent. And again, back to making too many requests to the same people. They may be supportive, and they may understand what they're being asked to do, but if they're being asked multiple times on the same project, then that may be a little less free, if they feel obligated to do it. So that can affect the quality of the data. So yes, from an in-the-class perspective, it would be nice to maintain some goodwill amongst your user group. But aside from that social aspect, there is also the selfish aspect: if you follow these steps, then the data you get might be of better quality and give you insight into other issues that you wouldn't have seen if you had just asked the same smaller group multiple times. Does that make sense?

-

yeah.

-

Okay. Any questions about this.

-

I wanted to know a bit more about formative and summative. How do we actually define these?

-

Well, so if we're doing a formative evaluation, then it might be more of an exploration of what the issues are. If we're talking to users, you might ask them:

-

What.

-

What do you like about this interface? What do you dislike about the interface? What tasks are easy to accomplish? What tasks would you like to be able to do with this interface that you can't do easily now? That might be one way to think about a formative evaluation. A summative evaluation would be to say: okay, imagine you wanted to accomplish this task, and you use this interface design to accomplish it. Then we can collect data about how well people completed the task using the interface design we've completed, and whether we met our goal. So there might be a time or a score that we would say indicates success. Or, if we're thinking about what kinds of questions to ask, we might have an item like, "Was this interface usable for your task?"

-

And then.

-

You might report the ratings as, you know, 75% said agree or strongly agree. So in the formative case, we're trying to identify the issues. And summative is: have we succeeded in addressing the issues that we've identified? Does that help with your question?
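A small Python sketch of the summative check described here: given Likert responses to an item such as "Was this interface usable for your task?", compute the share who said agree or strongly agree and compare it with a target. The 75% target echoes the figure mentioned above; the function names and sample responses are made up for illustration.

```python
from collections import Counter


def share_agreeing(responses):
    """Fraction of responses that are 'agree' or 'strongly agree'."""
    if not responses:
        raise ValueError("need at least one response")
    counts = Counter(response.lower() for response in responses)
    return (counts["agree"] + counts["strongly agree"]) / len(responses)


def meets_goal(responses, target=0.75):
    """True when the agreeing share reaches the agreed-upon target."""
    return share_agreeing(responses) >= target


# Made-up responses from eight hypothetical participants.
sample = [
    "Strongly agree", "Agree", "Agree", "Strongly agree",
    "Agree", "Strongly agree", "Not sure", "Disagree",
]
print(share_agreeing(sample), meets_goal(sample))
```

The target has to be set before the data is collected for this to work as a summative measure; a formative round would instead look at the open-ended comments behind the "not sure" and "disagree" answers.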

-

yeah.

-

So we've been talking a lot about having users, about empirical evaluation. If we're doing things like a walkthrough or a heuristic evaluation, we're just looking at the interface design, without necessarily seeing how it's being used by people. Then certain things might pop out as we do an inspection, things that we see as being problematic in the design of the interface. It might be that those don't influence users nearly as much as we think they might when they're going through the interface with a particular task in mind to complete. So we can do an analytical evaluation without having to engage users. Not because we don't like users; we shouldn't be afraid of asking people for their thoughts about something that we've designed. But you could think about an analytical evaluation being done as a step before we put the design in front of people, or in conjunction with empirical evaluation, so that we can address problems from both angles. What we were doing last day was an analytical approach with the keystroke-level model, and also talking about Fitts's law.

-

anyway.

-

I think we're at time now. Does that make sense? So I have office hours today, two to three. Did you see my note yesterday about my office hours being from two to three because of my dentist appointment? I'm just looking at the chat now, which I didn't see. Exactly, yeah. We'd like to make sure that we're getting a sample, a fair representation, of the population that will be using it. And if we say, you know, they should be able to complete a task in two seconds, let's say, as a number to use, then in a summative evaluation we'd like to involve more people, so that we can get some more confidence in our measurement of the time to complete the task. If we said one person can complete the task in two seconds, so that's it, we've done our job, that's not necessarily convincing evidence, is it? In the formative evaluation, we were saying, what do you think about this interface that we've sketched with paper and pencil? That's an appropriate approach for formative evaluation. But with a summative evaluation, we'd like to be able to have confidence that, for the intended user group, we're meeting an agreed-upon standard. So then we need to have more people, more data, to give us that confidence. Does that make sense? Okay. So now I wonder, is "yeah" as good as a "yes"? My daughter Alice says "yeah" to me in text messages, so for her I think that's the case. Sometimes she says "yep". Anyway, I digress. Thanks for hanging out on Zoom with me today. If you have questions, I'll be back in my office hours Zoom window from two to three today; otherwise I've got another office hour tomorrow, one to two, and then we'll see you on Friday. So take care, stay well, and see you again soon.
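To illustrate why one person finishing in two seconds is not convincing evidence, here is a minimal Python sketch that summarizes task completion times with a mean and an approximate 95% interval. It uses a normal approximation (`z = 1.96`), which is an assumption made for simplicity; for small samples a t-based interval would be wider. The function name and the timing data are invented for the example.

```python
import math
import statistics


def mean_with_interval(times, z=1.96):
    """Return (mean, interval) for task times in seconds.

    The interval is None when there is only one observation, because the
    spread cannot be estimated from a single measurement.
    """
    mean = statistics.mean(times)
    if len(times) < 2:
        return mean, None
    # Standard error shrinks as more participants are measured.
    standard_error = statistics.stdev(times) / math.sqrt(len(times))
    return mean, (mean - z * standard_error, mean + z * standard_error)


one_person = [2.0]
six_people = [1.8, 2.4, 2.1, 1.9, 2.6, 2.2]  # made-up times in seconds

print(mean_with_interval(one_person))
print(mean_with_interval(six_people))
```

With a single participant there is a mean but no interval at all, while adding participants narrows the interval around the mean, which is the added confidence a summative evaluation needs.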

-

Have a good day.

-

thanks you too.

Zoom Chat Transcript

-

Good afternoon Professor

-

Good Afternoon Professor

-

Alright

-

Good

-

not to bad

-

good

-

Student password

-

Student password

-

yes

-

yes

-

yes

-

yes

-

I think we should get input from a sample (fair representation of the population using it)

-

yeah

-

no

-

No

-

no

-

yeah

-

yes

-

Thank you for the class :)

-

thank you

-

thank you

Responses

What important concept or perspective did you encounter today?

- Discussed the topic of evaluation of a interface.

- In today’s meeting, we discussed about evaluation and its two kinds that is formative and summative.

- Today, the professor talked about interface evaluation with users and data, and there were two kinds of evaluation, formative and summative.

- In this session, Dr. Hepting started to talk about Evaluation and I learned so many new things in this regard.

- In today's lecture, we have learned about metaphors, web applications example, fitts law and GOMS KLM.

- We talked about the user inputs and response.

- Professor discussed about the evaluations of the interfaces. Evaluations can be done empirically. There are many ways to collect data in evaluation. Such as: open-ended questions, Likert scale. We need a fair amount of sample from the real users to perform the evaluation properly.

- Users evaluation of an interface and how to collect data and information and what kind of methods to use whilst collecting and interpreting data.

- user evaluation of designs

- Approach to evaluation, especially looking at it from the empirical and the analytical perspective

- Today, I understood two kinds of evaluation: formative and summative. Moreover, Professor also discussed the application of those kind in human computer interface.

- The most important thing I learned about today's class was about how crazily dependent we are on technology these days.

- today we have discussed the evaluation of the interface which includes how it gets evaluated and what factor matters in this process?

- In today's meeting we leant about the collection of data regarding user interaction and how it could help us in making better platforms

- Today's class was a reflection on how we are dependent on technology, and we talked about the kinds of evaluation, formative and summative.

- Today we talked about evaluation of design in detail.

- Process of evaluating the design

- In today's meeting, we discussed about the project and project's grade.

- Discussed a method for collecting and evaluating user feedback.

- Today's class was very interesting as we were teach in the old school way instead of learning it digitally, Teacher helped us learn it on the white board discussing about metaphors on Professors website and the issue with upcoming activity today. I am so interested to learn more in this way as it helps remember more than digitally (Screen share)

- Software design and estimation play the key role for software development process. Different methods are used for architecture design and detailed design evaluation. For architectural design stage a technique that allows selecting and evaluating suite of architectural patterns is proposed. It allows us to consistently evaluate the impact of specific patterns to software characteristics with a given functionality. Also the criterion of efficiency metric is proposed which helps us to evaluate architectural pa

Was there anything today that was difficult to understand?

- Acquiring a number of users representative of the target population for evaluation.

Was there anything today about which you would like to know more?

- We discussed about evaluation today which was nice but I want to know more about our next assignment which is due next week I think, so are we going to discuss that in class or should we just read the instructions on your website and start doing that?

- Can you explain the next group project to the class ?

- In today's meeting, the professor showed us the evaluation of users and data.

- Today I learned about evaluation and would like to know more about the process that goes into evaluating a interface.

- metaphors about snapchat still confused me, and would be interesting to touch up upon more

- i think the valuation discussion today was really interesting, I may be interested in having an example of the whole evaluation being applied into practice

- Discussion Doneee

- For today class, we talked about developing a framework to evaluate the search interface designs with recruiting participants. When we are designing consent forms for participants, we have to clarify the purpose of our study. I'm just wondering about the situation that we want to deceive participant during study. what about this situation?

- How to process and evaluate user input and data

- Is about improving interface design.

Wiki

Link to the UR Courses wiki page for this meeting