Basic Overfitting Phenomenon

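Overfitting is bad: a hypothesis that fits the training data too closely, noise included, can predict poorly on new data.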

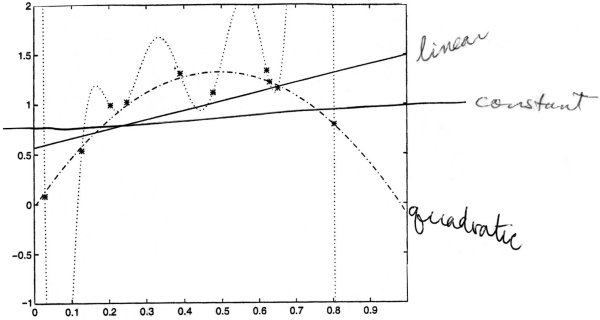

Example: polynomial regression

Suppose we use a hypothesis space made up of many classes of functions, $H_0, H_1, H_2, \dots$, where $H_k$ is the class of polynomials of degree at most $k$.
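Written out, and assuming (as the later reference to $H_9$ suggests) that $H_k$ is the set of polynomials of degree at most $k$, the classes are nested. Empirical error minimization then picks the best-fitting member of a class on the $n$ training points. Squared error is an assumption here, since the notes do not fix a loss:

$$
H_k = \Big\{\, h(x) = \sum_{j=0}^{k} w_j x^j \;:\; w_0, \dots, w_k \in \mathbb{R} \,\Big\},
\qquad H_0 \subset H_1 \subset H_2 \subset \cdots
$$

$$
\hat h_k = \arg\min_{h \in H_k} \frac{1}{n} \sum_{i=1}^{n} \big(h(x_i) - y_i\big)^2
$$

Because the classes are nested, the minimum training error can only stay the same or decrease as $k$ grows.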

Given $n = 10$ training points $(x_1, y_1), \dots, (x_{10}, y_{10})$.

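Since there are $n = 10$ data points (with distinct $x_i$), the class $H_{n-1} = H_9$ contains a function that fits them exactly: a polynomial of degree 9 can interpolate any 10 points with distinct $x$-values.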
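A quick numerical check of the exact-fit claim, as a minimal sketch. The data are hypothetical (10 evenly spaced $x$-values, targets $\sin(2\pi x)$ plus Gaussian noise with standard deviation 0.2), and NumPy's `Polynomial.fit` stands in for least-squares fitting within $H_9$:

```python
import numpy as np
from numpy.polynomial import Polynomial

# Hypothetical training set (not from the notes): 10 evenly spaced, hence distinct,
# x-values with noisy targets y = sin(2*pi*x) + Gaussian noise (sd 0.2, assumed).
rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 10)
y = np.sin(2 * np.pi * x) + 0.2 * rng.standard_normal(10)

# Least-squares fit within H_9 (degree <= 9). With 10 distinct points this
# interpolates them, so the residuals are only floating-point round-off.
h9 = Polynomial.fit(x, y, deg=9)
residuals = h9(x) - y
print(f"max |h9(x_i) - y_i| = {np.max(np.abs(residuals)):.1e}")
```

Zero training error here says nothing about how $h_9$ behaves between or beyond the training points.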

Which hypothesis would predict well on future examples?

- Although the hypothesis $h_9 \in H_9$ fits these 10 data points exactly, it is unlikely to predict the next data point well.
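One way to see this is to compare training error with error on a large fresh sample from the same process. The sketch below assumes a target of $\sin(2\pi x)$ with Gaussian noise (sd 0.2) and uses 1000 held-out points as a stand-in for "future examples"; neither choice comes from the notes:

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(1)

def target(x):
    # Assumed "true" function behind the data; the notes do not specify one.
    return np.sin(2 * np.pi * x)

# 10 training points (evenly spaced, so distinct) with Gaussian noise, sd 0.2 (assumed).
x_train = np.linspace(0.0, 1.0, 10)
y_train = target(x_train) + 0.2 * rng.standard_normal(10)

# A large fresh sample from the same process stands in for "future examples".
x_test = rng.uniform(0.0, 1.0, 1000)
y_test = target(x_test) + 0.2 * rng.standard_normal(1000)

def mse(h, x, y):
    """Mean squared error of hypothesis h on the points (x, y)."""
    return float(np.mean((h(x) - y) ** 2))

results = {}
for k in (1, 3, 9):
    h = Polynomial.fit(x_train, y_train, deg=k)  # empirical error minimizer in H_k
    results[k] = (mse(h, x_train, y_train), mse(h, x_test, y_test))
    print(f"degree {k}: train MSE {results[k][0]:.3g}, test MSE {results[k][1]:.3g}")
```

Training error is guaranteed not to increase with the degree, because the classes are nested. Test error carries no such guarantee, and in runs like this one would typically expect degree 9 to have the largest gap between the two.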

When does empirical error minimization overfit?

It depends on the relationship between:

- hypothesis class, $H$

- training sample size, $n$

- data generating process
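The three factors can be turned into parameters of a small experiment: the degree `k` picks the hypothesis class, `n` sets the training sample size, and `noise_sd` is one knob on the data-generating process. This is a sketch under assumed choices (target $\sin(2\pi x)$, Gaussian noise, evenly spaced training inputs, squared error). The helper name `train_and_test_mse` is hypothetical:

```python
import numpy as np
from numpy.polynomial import Polynomial

def train_and_test_mse(k, n, noise_sd, n_test=2000, seed=0):
    """Fit a degree-k polynomial by least squares to n noisy samples and return
    (train MSE, test MSE). The target sin(2*pi*x) and the Gaussian noise are
    assumptions standing in for the data-generating process."""
    rng = np.random.default_rng(seed)
    x_train = np.linspace(0.0, 1.0, n)
    y_train = np.sin(2 * np.pi * x_train) + noise_sd * rng.standard_normal(n)
    x_test = rng.uniform(0.0, 1.0, n_test)
    y_test = np.sin(2 * np.pi * x_test) + noise_sd * rng.standard_normal(n_test)
    h = Polynomial.fit(x_train, y_train, deg=k)  # empirical error minimizer in H_k
    train = float(np.mean((h(x_train) - y_train) ** 2))
    test = float(np.mean((h(x_test) - y_test) ** 2))
    return train, test

# Same class H_9, growing sample size: once n exceeds 10 the fit can no longer
# pass through every noisy point, so training error becomes positive.
for n in (10, 20, 200):
    train, test = train_and_test_mse(k=9, n=n, noise_sd=0.2)
    print(f"n={n:4d}: train MSE {train:.3g}, test MSE {test:.3g}, gap {test - train:.3g}")
```

Changing `k` (the class), `n` (the sample size), or `noise_sd` (the process) one at a time shows how each affects the gap between training and test error.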